Artist Reflection: Emotional Data as a System

October 26, 2016, 00:00

As I begin my Eyebeam Project Residency at BuzzFeed, I'm planning to create (at least!) three prototypes that span design, ethics and machine learning. Here are some of the ways I'm trying to wrap my head around this burgeoning field.

Caroline Sinders is a past Eyebeam resident through a joint residency with BuzzFeed’s Open Lab. She's an artist and machine learning designer from New Orleans, LA, and is currently based between San Francisco and Brooklyn.

She holds a BFA in photography and imaging from NYU’s Tisch Photography and Imaging Program and an MPS from NYU’s Tisch Interactive Telecommunications Program. She is currently exploring surveillance, conversation, politics, online harassment and emotional trauma within digital spaces and social media. Her core project at the Eyebeam BuzzFeed Open Lab is a working prototype, built with Angelina Fabbro, that uses commenting data from various journalistic entities, along with social media data, to mitigate harassment through machine learning.

___________________

As a resident at Eyebeam and BuzzFeed’s Open Lab, I’ll spend this year exploring how to apply design to machine learning and personal data. Here are just three of the questions I’m trying to work out.

1 Understanding machine learning through better design

Within machine learning, the data that informs the algorithms is constantly changing while the structure of the product/piece/space/thing remains stable. The bare bones of a machine learning system are not altered significantly, since the structure of the algorithms and the data types remain constant. That said, the actual manifestation of that data often changes. That explanation was too long, so let me make it easier. Machine learning is a lot like dealing with organisms or nature: things are constantly shifting. I like to think of training a machine learning model as trying to teach a toddler to do something over a long period of time. Children often hold onto arbitrary details, and a machine learning model can do the same with its data sets. The organic nature of machine learning is incredibly interesting, and my open source templates and prototype will be experiments in how machine learning can be better understood by all different kinds of people. I’m calling this work ‘temperamental UI’ to explore what happens to machine learning UI and UX when the data sets start to change from user interactions.

2 Citizen surveillance of surveillance cameras

I’m photographing, identifying, and mapping all of the surveillance cameras in San Francisco neighborhoods, then running a neural net over the gathered visual and geographical data set. I’m interested in finding correlations in the demographics of surveilled areas. What will an algorithm pick up? Is it placement, is it location, is it colors, is it kinds of cameras? I started off my career as a photographer, and now I want to take a closer look at the cameras that fill our cities. Instead of taking photos from Google’s bird’s eye view, I’ll be trying to stay grounded and photograph them as people would encounter them. This project is part of a series of VR games called Dark Patterns that I am building with Mani Nilchiani.

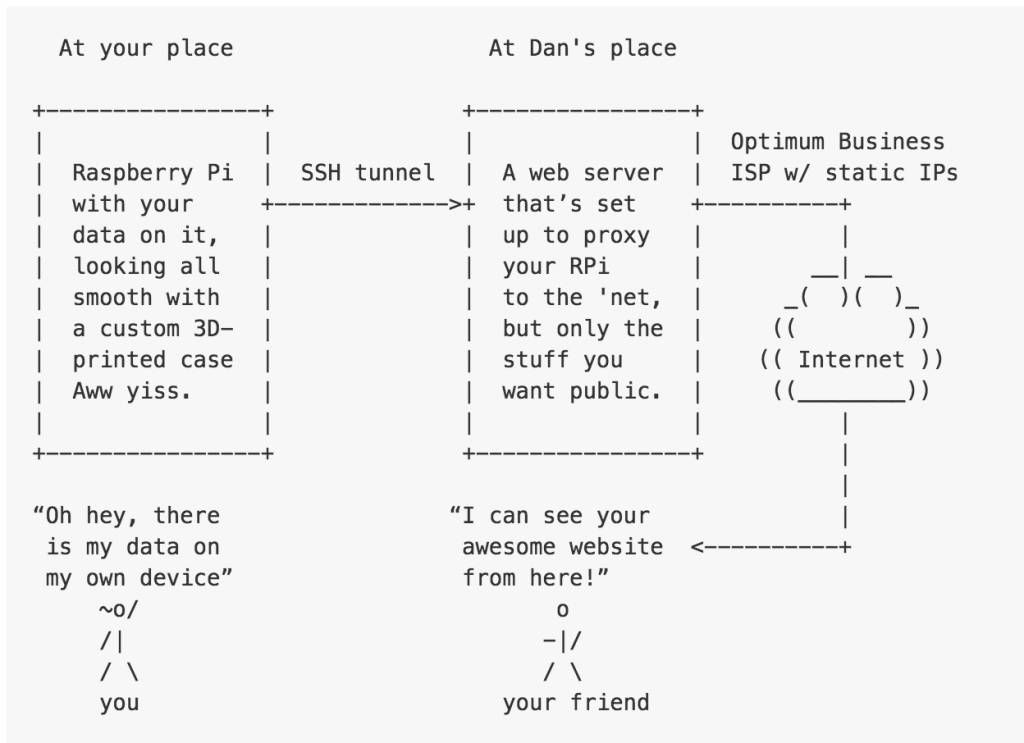

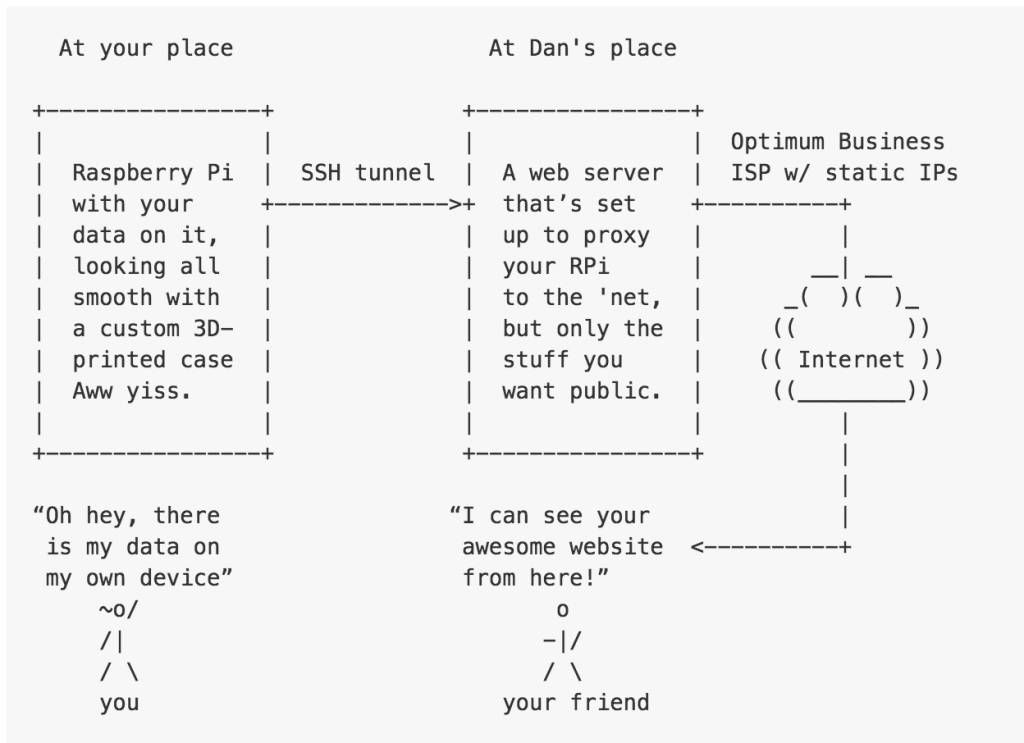

3 Ownership of the data that we create

Right now, our data is owned by the platforms that we give it to, usually so they can resell it as big data to advertisers. What would an alternative model using “small data” look like, where everybody owned their data in a platform cooperative? Dan Phiffer, an Eyebeam Impact Resident, and I are building a small series of prototypes on Raspberry Pis. We’re trying to work out the legal complexities of data collectivism. I’m excited to build something really small, something really, really small, something that isn’t meant to scale up, and something that is meant to be egalitarian for users. It’s like making a zine for a systems designer.

A patchwork virtual space created by Caroline Sinders and Mani Nilchiani.
